This post is my personal technical study note on the DELL EMC VxRail hyperconverged solution, drafted during a personal knowledge update. It is not intended to cover every part of the solution, and some notes are based on my own understanding of it. My intention is to outline the key solution elements for readers who want a quick overview.

The note is based on VxRail version 4.7. Some figures in this post are referenced from DELL EMC VxRail public documents.

This is the second part of the note. The first part is linked below:

DELL EMC VxRail Study Notes (Part 1)

Storage efficiency

Deduplication and Compression

- Performed inline when data is de-staged from the cache to the capacity drives, so no host I/O latency is incurred.

- 4K block

- Deduplication algorithm is applied at the disk-group level

- A single copy of each unique 4K block per disk group

- LZ4 compression is applied after the blocks are deduplicated and before being written to SSD.
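The de-stage flow described above (deduplicate 4K blocks within a disk group, then compress what remains) can be sketched roughly as follows. This is a minimal illustration, not the vSAN implementation: the `destage` and `read_back` functions and the dictionary standing in for a disk group are hypothetical, SHA-1 is assumed as the block fingerprint, and `zlib` is used as a stand-in for LZ4 because LZ4 is not in the Python standard library.

```python
import hashlib
import zlib

BLOCK_SIZE = 4096  # 4K block, as in the notes above


def destage(data: bytes, disk_group_store: dict) -> list:
    """Split data into 4K blocks, deduplicate, then compress new blocks.

    disk_group_store maps a block fingerprint to compressed bytes and stands
    in for one disk group's capacity tier (hypothetical structure).
    Returns the fingerprints needed to reassemble the data.
    """
    fingerprints = []
    for offset in range(0, len(data), BLOCK_SIZE):
        block = data[offset:offset + BLOCK_SIZE]
        fp = hashlib.sha1(block).hexdigest()  # assumed fingerprint function
        if fp not in disk_group_store:
            # Only unique blocks are compressed and written (zlib stands in for LZ4).
            disk_group_store[fp] = zlib.compress(block)
        fingerprints.append(fp)
    return fingerprints


def read_back(fingerprints: list, disk_group_store: dict) -> bytes:
    """Reassemble the original data from the stored unique blocks."""
    return b"".join(zlib.decompress(disk_group_store[fp]) for fp in fingerprints)


store = {}
payload = b"A" * BLOCK_SIZE * 3 + b"B" * BLOCK_SIZE
refs = destage(payload, store)
print(len(refs), "logical blocks,", len(store), "unique blocks stored")
```

Because the store is scoped to a single disk group in this sketch, identical blocks landing in two different disk groups would each be stored once per group, which matches the "single copy per disk group" point above.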

Erasure coding

- Erasure coding is available only on all-flash VxRail (it adds performance overhead when rebuilding).

- FTT=1: single-parity data protection (RAID-5), min 4 nodes.

- FTT=2: double-parity data protection (RAID-6), min 6 nodes.

- Defined in storage policy and applied to the required VM.
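To make the trade-off between the protection methods concrete, here is a small capacity calculation. It is only a sketch: the stripe layouts (3 data + 1 parity for RAID-5, 4 data + 2 parity for RAID-6) and the RAID-1 comparison row with a 3-node minimum are assumptions taken from general vSAN behaviour, not from the notes above, and the `Layout` class and `raw_capacity_gb` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layout:
    description: str
    data_parts: int
    redundancy_parts: int
    min_nodes: int

    @property
    def overhead(self) -> float:
        """Raw capacity consumed per unit of usable capacity."""
        return (self.data_parts + self.redundancy_parts) / self.data_parts


# Keyed by (method, FTT). Stripe widths are assumptions, see the text above.
LAYOUTS = {
    ("RAID-1", 1): Layout("mirroring, FTT=1", 1, 1, 3),
    ("RAID-5", 1): Layout("single parity, FTT=1", 3, 1, 4),
    ("RAID-6", 2): Layout("double parity, FTT=2", 4, 2, 6),
}


def raw_capacity_gb(vm_size_gb: float, method: str, ftt: int) -> float:
    """Raw capacity a VM of the given size would consume under a policy."""
    return vm_size_gb * LAYOUTS[(method, ftt)].overhead


for (method, ftt), layout in LAYOUTS.items():
    print(method, f"FTT={ftt}", f"min nodes={layout.min_nodes}",
          f"100 GB VM uses {raw_capacity_gb(100, method, ftt):.1f} GB raw")
```

Under these assumptions, erasure coding tolerates the same number of failures as mirroring with less raw capacity, at the cost of a higher minimum node count.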

Data Resiliency

Disk Level

- Depends on the FTT level. (FTT is defined in storage policy and applied to VM)

- Tolerates multiple disk failures on the same node.

- Multiple disk failures across multiple nodes may result in data loss.

Node Level

- Depends on the FTT level.

Fault Domain

- Fault domains are a way to configure tolerance for rack and site failures.

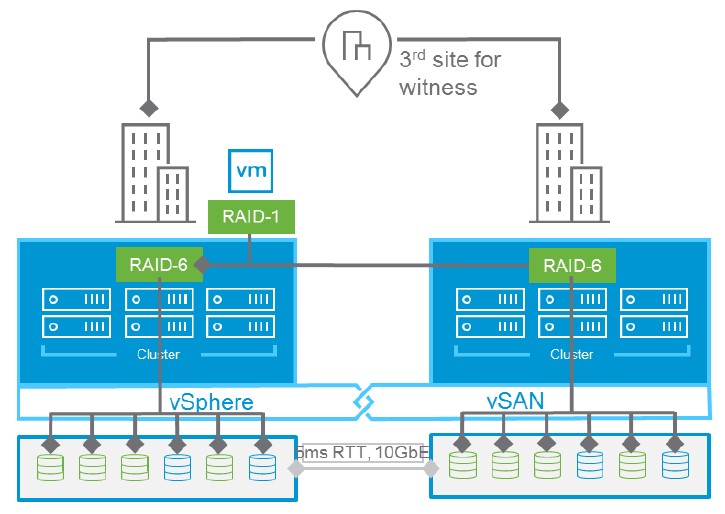

Stretched Cluster

- Single cluster across two sites, one copy of data at each site.

- Since vSAN 6.6, supports Stretched Clusters with Local Protection.

- PFTT (primary failures to tolerate) = RAID-1 mirroring across the two sites

- SFTT (secondary failures to tolerate) = RAID-1/5/6 local protection within each site

- Maximum 5 ms round-trip latency between the two data sites.

- A witness host is required.

- Min: 3+3+1 (7 nodes); Max: 15+15+1 (31 nodes).

- Support both hybrid and all-flash VxRail

- Both Layer-2 (data) and Layer-3 (witness) networks are required.

- The witness is marked as failed after 5 consecutive heartbeat failures.
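The sizing limits and the witness failure rule above can be expressed as a short check. This is a sketch under assumptions: the function and class names are hypothetical, and the way individual checks are fed into the monitor is illustrative rather than how vSAN actually probes the witness.

```python
MIN_NODES_PER_SITE = 3    # from the 3+3+1 minimum in the notes
MAX_NODES_PER_SITE = 15   # from the 15+15+1 maximum in the notes
WITNESS_FAILURE_THRESHOLD = 5


def validate_stretched_cluster(site_a: int, site_b: int, has_witness: bool) -> list:
    """Return a list of problems; an empty list means the layout fits the notes."""
    problems = []
    for name, count in (("site A", site_a), ("site B", site_b)):
        if count < MIN_NODES_PER_SITE:
            problems.append(f"{name} has {count} nodes, minimum is {MIN_NODES_PER_SITE}")
        if count > MAX_NODES_PER_SITE:
            problems.append(f"{name} has {count} nodes, maximum is {MAX_NODES_PER_SITE}")
    if not has_witness:
        problems.append("a witness is required")
    return problems


class WitnessMonitor:
    """Counts consecutive failed checks against the witness (hypothetical helper)."""

    def __init__(self, threshold: int = WITNESS_FAILURE_THRESHOLD):
        self.threshold = threshold
        self.consecutive_failures = 0

    def record(self, ok: bool) -> bool:
        """Record one check result; return True once the witness counts as failed."""
        self.consecutive_failures = 0 if ok else self.consecutive_failures + 1
        return self.consecutive_failures >= self.threshold


print(validate_stretched_cluster(3, 3, True))
print(validate_stretched_cluster(2, 16, False))
```

A single successful check resets the counter, so only an unbroken run of five failures marks the witness as failed in this sketch.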

Network

General Rules

- All-flash system requires two 10/25GbE ports per node.

- Hybrid system requires either two 10/25GbE ports or four 1GbE ports per node.

- For a single-node appliance, the 10GbE ports auto-negotiate down to 1GbE in a 1Gb solution.

- For a single-node appliance, an additional PCIe NIC can be configured for the customer network.

- VLAN and vSphere Network I/O Control (NIOC) settings are applied to each default port group.

- Limitations when using 1GbE uplinks:

- A minimum of four 1GbE NICs is required.

- Only the hybrid storage configuration is supported.

- The maximum supported node count is eight.

- Only single-socket nodes are supported.
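The port rules above lend themselves to a simple pre-deployment check. The sketch below encodes only what the notes state; the `check_uplinks` function, its parameters, and the storage labels `"hybrid"` and `"all-flash"` are hypothetical names chosen for illustration.

```python
def check_uplinks(storage: str, port_speed_gbe: int, port_count: int,
                  node_count: int = 1, sockets: int = 1) -> list:
    """Return a list of rule violations for one node design (empty means OK)."""
    problems = []
    if port_speed_gbe in (10, 25):
        # Both all-flash and hybrid systems accept two 10/25GbE ports per node.
        if port_count < 2:
            problems.append("10/25GbE designs need two ports per node")
    elif port_speed_gbe == 1:
        # 1GbE uplinks carry the extra limitations listed in the notes.
        if storage != "hybrid":
            problems.append("1GbE uplinks are supported only on hybrid storage")
        if port_count < 4:
            problems.append("1GbE designs need at least four NICs per node")
        if node_count > 8:
            problems.append("1GbE clusters support at most eight nodes")
        if sockets != 1:
            problems.append("1GbE uplinks are supported only on single-socket nodes")
    else:
        problems.append(f"unsupported port speed: {port_speed_gbe}GbE")
    return problems


print(check_uplinks("all-flash", 10, 2))
print(check_uplinks("all-flash", 1, 2, node_count=9, sockets=2))
```

Returning a list of messages rather than a boolean makes it easy to report every violated rule at once.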

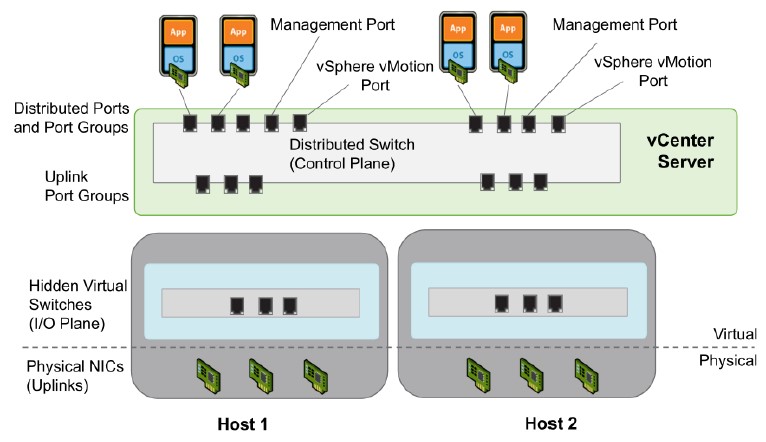

The network requires one VMware vSphere Distributed Switch (VDS) with multiple default port groups. vSAN relies on the VDS for its storage-virtualization capabilities, and the VxRail Appliance uses the VDS for appliance traffic.

RAILDistrib

- Management Network (split into External and Internal Management from 4.7)

- vSAN Network

- vMotion

- Virtual Machine

The port groups will be balanced across the uplink vmnics.

Deployment

Deployment General Rules

- The VxRail Appliance is built at the factory with a default operating environment.

- Site specifications must be configured at the time of deployment, including the IP address range, VLANs, uplinks, etc.

- The ToR switch must be configured for both IPv4 and IPv6.

- The VxRail Appliance requires Layer-2 network connectivity.

- Routing between networks or VLANs occurs at the aggregation layer

Initial configuration through VxRail Manager

- Browse to the VxRail Manager initial IP address and choose “Get Started”

- Provide Management IP interfaces/vCenter details

- Provide vMotion IP interfaces details

- Provide vSAN IP interfaces details

- Provide VM Networks IP interfaces details

- Provide hostname and IP for Log Insight

- The configuration can be saved to a JSON file for future deployments.

- After deployment, run a health check through the HEALTH status tab of the VxRail Appliance in vCenter.
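As an illustration of keeping the wizard inputs above in a JSON file, the sketch below builds a configuration, serializes it, and checks that every section is present. The field names and structure are hypothetical and are not the schema VxRail Manager actually uses; the addresses come from documentation-only ranges.

```python
import json

# Sections mirror the wizard steps listed above (names are hypothetical).
REQUIRED_SECTIONS = ["management", "vcenter", "vmotion", "vsan",
                     "vm_networks", "log_insight"]

deployment = {
    "management": {"ip_range": ["192.0.2.10", "192.0.2.13"], "vlan": 100},
    "vcenter": {"hostname": "vcenter.example.com", "ip": "192.0.2.20"},
    "vmotion": {"ip_range": ["198.51.100.10", "198.51.100.13"], "vlan": 110},
    "vsan": {"ip_range": ["203.0.113.10", "203.0.113.13"], "vlan": 120},
    "vm_networks": [{"name": "vm-network-a", "vlan": 130}],
    "log_insight": {"hostname": "loginsight.example.com", "ip": "192.0.2.30"},
}


def missing_sections(config: dict) -> list:
    """List the required sections that a saved configuration lacks."""
    return [name for name in REQUIRED_SECTIONS if name not in config]


saved = json.dumps(deployment, indent=2)   # what would be written to the file
restored = json.loads(saved)               # what a later deployment would read

print(missing_sections(restored))
print(missing_sections({"management": {}}))
```

Checking a saved file for missing sections before reuse is one way to catch an incomplete configuration early.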

Management Notes

VxRail Manager

- Runs as a virtual appliance in VxRail.

- Provides VxRail deployment, configuration, and node monitoring.

- Displays storage, CPU, and memory utilization at the cluster, appliance, and node level.

- Provides cluster expansion and automatically detects new appliances.

- Is used to replace failed disk drives.

- Leverages VMware vRealize Log Insight to monitor system events and provide ongoing holistic notifications.

- A vCenter Server can manage multiple VxRail Appliances.

- The VMware components are managed through vCenter.

Proactive rebalance

- Proactive rebalance addresses two typical use cases:

- Adding a new node to an existing vSAN cluster, or bringing a node out of the decommissioned state.

- Making use of the new nodes even when the fullness of the existing disks is below 80%.

- Performed through the CLI (RVC command): vsan.proactive_rebalance --start ~/computers/cluster
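The decision behind a proactive rebalance can be sketched as below. This is an illustration only: it assumes, as the 80% figure above implies, that rebalancing otherwise starts on its own once a disk reaches 80% fullness, and the `imbalance_pct` parameter and its default of 30 are hypothetical values chosen for the example.

```python
REACTIVE_THRESHOLD_PCT = 80  # fullness figure quoted in the notes


def rebalance_mode(disk_fullness_pct: list, imbalance_pct: float = 30) -> str:
    """Classify a cluster as 'automatic', 'proactive', or 'none'.

    disk_fullness_pct holds the fullness of each disk as a percentage.
    imbalance_pct is a hypothetical spread above which a manual
    (proactive) rebalance is worth starting.
    """
    fullest = max(disk_fullness_pct)
    emptiest = min(disk_fullness_pct)
    if fullest >= REACTIVE_THRESHOLD_PCT:
        return "automatic"   # assumed to start without operator action
    if fullest - emptiest > imbalance_pct:
        return "proactive"   # e.g. a freshly added, empty node
    return "none"


print(rebalance_mode([85, 40, 0]))
print(rebalance_mode([60, 55, 0]))
print(rebalance_mode([50, 45, 40]))
```

The second call models the use case from the notes: existing disks sit well below 80%, but a newly added node is empty, so a proactive rebalance is the way to put it to use.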
