This post is my personal technical note on the HP SimpliVity hyperconverged solution, drafted while updating my own knowledge. It is not intended to cover every part of the solution, and some notes are based on my own understanding of it. My intention in drafting this note is to outline the key solution elements for quick readers.

This note is based on the SimpliVity 380 Gen10 server with OmniStack release 3.7.5. Some figures in this post are taken from HP public documents.

Solution Components:

The SimpliVity solution is composed of the following components:

- Hardware: OmniStack node (basic hardware building block)

- Hardware: OmniStack Accelerator Card (OAC, PCIe-based device for offload/acceleration)

- Software: Hypervisor (VMware/Hyper-V/KVM)

- Software: OmniStack Virtual Controller (OVC, single VM per node)

- Software: Arbiter (software running on Windows)

- Software: vCenter for VMware

Node

Each OmniStack node is composed of the following hardware parts, which vary by model:

- CPU

- Memory

- Physical disks (HDD/SSD) with a hardware RAID controller

- Network adapters (1 Gb and 10 Gb)

- OmniStack Accelerator Card (OAC)

OmniCube is the name of the turnkey appliance built on OEM server hardware.

OmniStack is the combination of hypervisor, compute, storage, storage network switching, backup, replication, cloud gateway, caching, WAN optimization, real-time deduplication, and more.

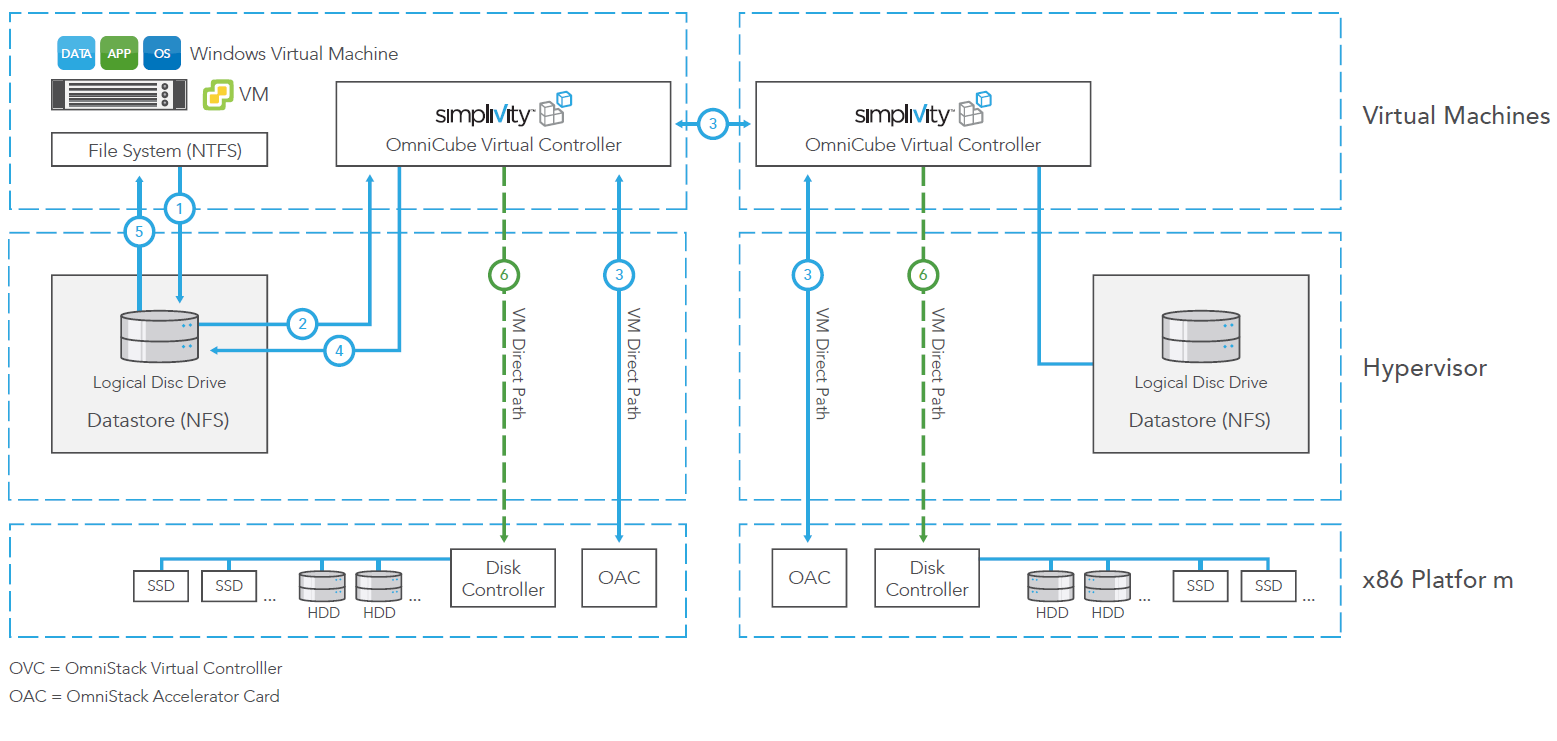

Each OmniStack node includes the following software parts:

- Customized Hypervisor (VMware/Hyper-V/KVM)

- OmniStack Virtual Controller (OVC)

  - A VM-based controller running on the hypervisor;

  - Every OmniStack node has a single OmniStack Virtual Controller;

  - The hypervisor passes data through to the OVC, and the OVC passes data to the physical disks via the OAC and the disk controller; and

  - The OVC presents an NFS datastore to the hypervisor layer.

OmniStack Accelerator Card (OAC)

A purpose-built, PCIe-based accelerator card designed by SimpliVity. The Accelerator offloads CPU-intensive functions from the x86 processors, performing inline deduplication, inline compression and inline write optimization.

- FPGA and NVRAM

- Super capacitors to provide power to the NVRAM upon a power loss

- Flash storage used to persist NVRAM after power loss

- All data is deduplicated, compressed and optimized inline by the OmniStack Accelerator Card, at 4 KB to 8 KB granularity, before it is written to disk.
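The OAC performs this in FPGA hardware, so the Python sketch below only illustrates the general idea of inline deduplication and compression at a fixed block granularity. The 8 KB block size, SHA-256 fingerprints, zlib compression and the `ingest`/`rebuild` helpers are assumptions chosen for illustration, not SimpliVity's actual implementation.

```python
from __future__ import annotations

import hashlib
import zlib

# Assumption: fixed 8 KB blocks (the upper end of the 4 KB to 8 KB range above).
BLOCK_SIZE = 8 * 1024


def ingest(data: bytes, store: dict[str, bytes]) -> list[str]:
    """Split data into fixed-size blocks, deduplicate them by SHA-256 digest and
    compress only blocks that are not already in the store.

    Returns the ordered list of digests (the "recipe") needed to rebuild the data.
    """
    recipe: list[str] = []
    for offset in range(0, len(data), BLOCK_SIZE):
        block = data[offset:offset + BLOCK_SIZE]
        digest = hashlib.sha256(block).hexdigest()
        if digest not in store:
            # New block: compress and persist it exactly once.
            store[digest] = zlib.compress(block)
        # Duplicate or not, the logical data only keeps a reference.
        recipe.append(digest)
    return recipe


def rebuild(recipe: list[str], store: dict[str, bytes]) -> bytes:
    """Reassemble the original bytes from the recipe."""
    return b"".join(zlib.decompress(store[d]) for d in recipe)


if __name__ == "__main__":
    store: dict[str, bytes] = {}
    # Three identical blocks followed by one different block.
    data = b"A" * BLOCK_SIZE * 3 + b"B" * BLOCK_SIZE
    recipe = ingest(data, store)
    print(len(recipe), "logical blocks,", len(store), "unique blocks stored")
    # → 4 logical blocks, 2 unique blocks stored
    assert rebuild(recipe, store) == data
```

Because duplicates are detected before anything is written, repeated blocks (for example, many VMs cloned from the same template) cost only a reference rather than another disk write.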

Cluster, Data Center and Federation

SimpliVity Data Center and Cluster

- A SimpliVity Data Center is a similar concept to a VMware data center

- One or more clusters in a single SimpliVity Data Center

- A single cluster supports 1 to 8 OmniStack nodes


- Each SimpliVity cluster forms a single logical storage grouping

- A SimpliVity Data Center is automatically defined from the VMware vSphere datacenter (the vCenter Server, the vSphere datacenter and the cluster must be created before deployment)

- A stretched cluster across two physical sites is supported:

  - Both sites are under the same SimpliVity/vSphere data center and cluster

  - High-bandwidth (10 Gbps), low-latency (<1 ms) interconnect

  - An Arbiter is required

  - VMware HA restarts the VMs after a site failure
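The sizing rules above can be turned into a quick pre-deployment check. The sketch below is a hypothetical planning helper, not an HPE tool: the `ClusterPlan` type and `validate` function are invented for illustration, and the limits are simply the figures recorded in these notes for OmniStack 3.7.5.

```python
from __future__ import annotations

from dataclasses import dataclass

# Limits as recorded in these notes for OmniStack 3.7.5; treat them as
# assumptions and confirm against the documentation for your release.
MAX_NODES_PER_CLUSTER = 8
MAX_NODES_PER_FEDERATION = 32
STRETCHED_MIN_GBPS = 10.0
STRETCHED_MAX_LATENCY_MS = 1.0


@dataclass
class ClusterPlan:
    """Hypothetical description of one planned cluster."""
    name: str
    nodes: int
    stretched: bool = False
    interconnect_gbps: float = 0.0
    interconnect_latency_ms: float = 0.0
    arbiter: bool = False


def validate(clusters: list[ClusterPlan]) -> list[str]:
    """Return a list of problems; an empty list means the plan fits the limits."""
    problems: list[str] = []
    for c in clusters:
        if not 1 <= c.nodes <= MAX_NODES_PER_CLUSTER:
            problems.append(f"{c.name}: {c.nodes} nodes (allowed 1-{MAX_NODES_PER_CLUSTER})")
        if c.stretched:
            if c.interconnect_gbps < STRETCHED_MIN_GBPS:
                problems.append(f"{c.name}: interconnect below {STRETCHED_MIN_GBPS} Gbps")
            if c.interconnect_latency_ms >= STRETCHED_MAX_LATENCY_MS:
                problems.append(f"{c.name}: latency not below {STRETCHED_MAX_LATENCY_MS} ms")
            if not c.arbiter:
                problems.append(f"{c.name}: stretched cluster without an Arbiter")
    total = sum(c.nodes for c in clusters)
    if total > MAX_NODES_PER_FEDERATION:
        problems.append(f"federation: {total} nodes (max {MAX_NODES_PER_FEDERATION})")
    return problems


if __name__ == "__main__":
    plan = [
        ClusterPlan("HQ", nodes=4, stretched=True, interconnect_gbps=10,
                    interconnect_latency_ms=0.5, arbiter=True),
        ClusterPlan("Branch", nodes=2),
    ]
    print(validate(plan))  # → []
```

Returning a list of messages rather than raising on the first failure lets a planner see every violated rule in one pass.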

SimpliVity Federation

- A networked collection of one or more SimpliVity Data Centers (clusters).

- A federation requires at least two OmniStack nodes.

- A single federation supports up to 32 OmniStack nodes.

- A logical construct that allows all member Data Centers to be managed as one.

- Within the same federation, data can move between sites without being taken out of its deduplicated and compressed format, which makes data movement efficient.

- Two ways to build a federation:

  - A single vCenter Server with multiple vSphere Data Centers/Clusters.

  - Multiple vCenter Servers in Linked Mode, each with one or more vSphere Data Centers/Clusters.

- SimpliVity recommends implementing multiple vCenter Servers for configurations with multiple SimpliVity Data Centers, so that each site maintains full independence.

- The full-mesh or hub-and-spoke topology is determined automatically.
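Moving data "without rehydrating it" generally means sending block fingerprints first and shipping only the blocks the destination does not already hold. The sketch below shows that generic technique under stated assumptions: SHA-256 fingerprints, an in-memory set standing in for the remote site's index, and a hypothetical `plan_transfer` helper. It is not SimpliVity's replication protocol.

```python
from __future__ import annotations

import hashlib


def fingerprint(block: bytes) -> str:
    """Content fingerprint used to recognise identical blocks at both sites."""
    return hashlib.sha256(block).hexdigest()


def plan_transfer(blocks: list[bytes], remote_index: set[str]) -> tuple[list[str], dict[str, bytes]]:
    """Plan a deduplication-aware copy of a VM's blocks to a remote site.

    Returns the full ordered list of fingerprints (metadata, always sent) and
    only the block payloads the remote site does not already hold.
    """
    recipe = [fingerprint(b) for b in blocks]
    payload: dict[str, bytes] = {}
    for fp, block in zip(recipe, blocks):
        if fp not in remote_index and fp not in payload:
            payload[fp] = block
    return recipe, payload


if __name__ == "__main__":
    # Hypothetical remote site that already holds a common OS block.
    remote = {fingerprint(b"os-block")}
    vm_blocks = [b"os-block", b"os-block", b"app-data"]
    recipe, payload = plan_transfer(vm_blocks, remote)
    print(len(recipe), len(payload))  # → 3 1
```

Only one of the three logical blocks crosses the WAN here; the rest of the transfer is metadata.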

Arbiter

- Prevents a “split-brain” scenario.

- Required in every federation.

- The Arbiter provides the tie-breaking vote when a cluster contains an even number of HPE OmniStack hosts (especially in two-node clusters).
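Why an even node count needs a tie-breaker can be shown with a simple majority vote. The sketch below is a simplified quorum model, not the Arbiter's actual protocol: `has_quorum` is a hypothetical helper, and it assumes one vote per node plus one Arbiter vote only when the node count is even, granted to at most one side of a partition.

```python
def has_quorum(partition_nodes: int, total_nodes: int, sees_arbiter: bool) -> bool:
    """Simplified majority vote for one side of a network partition.

    Assumptions: each node has one vote; the Arbiter adds one vote only when the
    node count is even (when a 50/50 split is possible), and it grants that vote
    to at most one side of the partition.
    """
    arbiter_votes = 1 if total_nodes % 2 == 0 else 0
    total_votes = total_nodes + arbiter_votes
    votes = partition_nodes + (arbiter_votes if sees_arbiter else 0)
    # Strict majority: with the Arbiter voting for at most one side,
    # two disjoint partitions can never both win.
    return 2 * votes > total_votes


if __name__ == "__main__":
    # Two-node cluster, inter-node link lost; only node A still reaches the Arbiter.
    print(has_quorum(1, 2, sees_arbiter=True))   # node A keeps serving: True
    print(has_quorum(1, 2, sees_arbiter=False))  # node B stops: False
```

Without the extra vote, each node in a two-node cluster holds exactly half the votes, so either both stop or both continue and the copies diverge (split brain).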

File System

Data Virtualization Platform (DVP)

- A fabric that extends across multiple OmniStack nodes in multiple globally distributed SimpliVity Data Centers.

- Abstracts the VM data away from the underlying hardware.

- In each cluster, the DVP presents this abstracted storage to the hypervisor on each node as a single pool of storage.

- The DVP is a logical construct realized by the OVC and the OAC.

- Unlike other distributed file systems, the SimpliVity DVP keeps the complete set of data associated with a VM on two distinct OmniStack nodes within a SimpliVity Data Center: the primary copy on one node and the secondary copy on another. A VM running on any node other than its primary or secondary node runs in non-optimized mode, with additional I/O hops.

- Across Data Centers, the DVP allows a single policy definition for data protection and efficient movement of data between physical locations.
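The two-copy placement rule can be captured in a small data structure. The `VmPlacement` class below is a hypothetical model for illustration only: it enforces that the primary and secondary copies live on distinct nodes and reports whether a given host has local access to the VM's data.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class VmPlacement:
    """Hypothetical record of where a VM's two full data copies live."""
    vm: str
    primary: str    # node holding the primary copy
    secondary: str  # node holding the secondary copy

    def __post_init__(self) -> None:
        if self.primary == self.secondary:
            raise ValueError("primary and secondary copies must be on distinct nodes")

    def access_mode(self, running_on: str) -> str:
        """'optimized' when the VM runs on a node with a local copy, else 'not optimized'."""
        return "optimized" if running_on in (self.primary, self.secondary) else "not optimized"


if __name__ == "__main__":
    p = VmPlacement("web01", primary="node1", secondary="node2")
    for host in ("node1", "node2", "node3"):
        print(host, p.access_mode(host))
    # → node1 optimized
    # → node2 optimized
    # → node3 not optimized
```

On node3 every I/O has to travel to node1 or node2, which is the extra hop the notes describe.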

Write IO Data Flow

- The VM writes data to the NFS datastore on the primary node.

- The write is redirected to the OVC on the primary node.

- The OVC on the primary node replicates the I/O to the OVC on the secondary node.

- Both OVCs place the data in DRAM on their OAC. This DRAM is protected by flash and super-capacitors.

- Once the data is staged in DRAM, the secondary node acknowledges to the primary node, and the primary node acknowledges to the hypervisor that the write is done.

- The hypervisor acknowledges back to the VM that the write operation is complete.

- In parallel, each OAC independently and asynchronously deduplicates, compresses, and serializes the data into full RAID stripe writes.
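The write path above can be sketched as a synchronous staging phase followed by a background destage. This is a simplified, single-process model under stated assumptions: the `Node` class and `vm_write` function are hypothetical, a Python list stands in for the power-protected NVRAM, the "asynchronous" step runs only when `destage()` is called, and full-stripe RAID layout is not modelled.

```python
from __future__ import annotations

import hashlib
import zlib
from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical model of one node's OVC/OAC pair."""
    name: str
    nvram: list[bytes] = field(default_factory=list)      # staged, power-protected writes
    disk: dict[str, bytes] = field(default_factory=dict)  # deduplicated, compressed blocks

    def stage(self, data: bytes) -> None:
        self.nvram.append(data)

    def destage(self) -> int:
        """Background step: deduplicate and compress staged writes onto disk.

        Returns how many new blocks were written.
        """
        written = 0
        for data in self.nvram:
            key = hashlib.sha256(data).hexdigest()
            if key not in self.disk:
                self.disk[key] = zlib.compress(data)
                written += 1
        self.nvram.clear()
        return written


def vm_write(data: bytes, primary: Node, secondary: Node, log: list[str]) -> None:
    """Synchronous part of the write path: the VM is acknowledged only after
    both nodes have staged the data."""
    log.append(f"{primary.name}: OVC receives write")
    primary.stage(data)
    secondary.stage(data)  # replicated copy on the secondary node
    log.append(f"{secondary.name}: staged, ack to {primary.name}")
    log.append(f"{primary.name}: staged, ack to hypervisor")
    log.append("hypervisor: ack to VM")


if __name__ == "__main__":
    log: list[str] = []
    a, b = Node("node1"), Node("node2")
    vm_write(b"hello", a, b, log)
    vm_write(b"hello", a, b, log)
    print(len(a.nvram), len(b.nvram))  # → 2 2 (acknowledged, not yet destaged)
    print(a.destage(), b.destage())    # → 1 1 (the duplicate is stored once per node)
```

The design point the sketch shows is that write latency depends only on staging into protected memory on two nodes; deduplication and compression happen off the acknowledgement path.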

VM Data Placement

- If VMware DRS is enabled, the placement of a new VM's primary and secondary copies is determined by the VMware DRS integration service, which consults the DVP platform service.

- A DRS rule is created for the VM to mark it “Should run” on the primary and secondary SimpliVity nodes, which is the most efficient path.

- Therefore, vMotion and HA restarts will normally place the VM on the primary or secondary node defined by the DRS rule.

- If VMware DRS is not enabled, the DRS rule is not set up. If a vMotion then moves the VM to a node with no local copy of its data, vCenter raises an alert with the warning “VM Data Access Not Optimized” to indicate that no local copy is available.

- Further reading:

  - https://community.hpe.com/t5/Shifting-to-Software-Defined/How-VM-data-is-managed-within-an-HPE-SimpliVity-cluster-Part-1/ba-p/7019102#.XDwpL1wzZPY

  - https://community.hpe.com/t5/Shifting-to-Software-Defined/How-VM-data-is-managed-within-an-HPE-SimpliVity-cluster-Part-2/ba-p/7022921#.XDg9A1wzZPY
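The difference between having the DRS rule and not having it can be modelled in a few lines. Both functions below are hypothetical and are not VMware or HPE APIs: `choose_host` treats the "should run" rule as a soft preference for the data nodes and, without DRS, simply takes the first candidate as a stand-in for a placement made without knowledge of data locality; `data_access_alert` returns the warning text quoted above when the VM lands on a node without a local copy.

```python
from __future__ import annotations

from typing import Optional

ALERT = "VM Data Access Not Optimized"  # warning text quoted in these notes


def choose_host(candidates: list[str], primary: str, secondary: str, drs_enabled: bool) -> str:
    """Hypothetical destination picker for a vMotion or HA restart.

    With DRS, the "should run" rule makes the data nodes preferred (a soft rule,
    so another host is still used if neither is available). Without DRS, this
    sketch just takes the first candidate.
    """
    if drs_enabled:
        preferred = [h for h in candidates if h in (primary, secondary)]
        if preferred:
            return preferred[0]
    return candidates[0]


def data_access_alert(host: str, primary: str, secondary: str) -> Optional[str]:
    """Return the alert text when the VM runs on a node without a local copy."""
    return None if host in (primary, secondary) else ALERT


if __name__ == "__main__":
    hosts = ["node3", "node2", "node1"]
    for drs in (True, False):
        host = choose_host(hosts, "node1", "node2", drs_enabled=drs)
        print(drs, host, data_access_alert(host, "node1", "node2"))
    # → True node2 None
    # → False node3 VM Data Access Not Optimized
```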

Continue reading in the second part: HP SimpliVity Study Notes (Part 2).
