This post is my personal technical study note on the Nutanix Hyperconverged Solution, drafted while updating my knowledge. It is not intended to cover every part of the solution, and some notes are based on my own understanding of it. My intention in drafting this note is to outline the key solution elements for quick readers.

This note is the second part of the series. The first part is available at the link below:

Data Resiliency

Redundancy Factor

- RF2 (minimum 3 nodes) or RF3 (minimum 5 nodes)

- If the data is RF2, the metadata is RF3; if the data is RF3, the metadata is RF5.

- RF peers are chosen for every episode (1GB of vDisk data), and all nodes participate.

- RF peers are chosen based on a series of factors (capacity, performance, etc.).

- The redundancy factor is set at the cluster level initially (RF2 by default) and can be changed later in the Prism console.

- Each storage container has its own redundancy factor, set at creation, which can be changed later via ncli (ctr edit name=storage_container_name rf=3).
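The RF rules above reduce to a small lookup table. The sketch below is a hypothetical planning helper, not a Nutanix tool: the RF-to-metadata mapping and the minimum node counts come from the note, while the "RF - 1 tolerated failures" and "usable ≈ raw / RF" figures are simplifying assumptions that ignore CVM reservations and other overheads.

```python
from dataclasses import dataclass

# Values from the note: data RF -> metadata RF and minimum cluster size.
RF_RULES = {
    2: {"metadata_rf": 3, "min_nodes": 3},
    3: {"metadata_rf": 5, "min_nodes": 5},
}


@dataclass
class RFPlan:
    data_rf: int
    metadata_rf: int
    min_nodes: int
    tolerated_failures: int  # assumption: data copies survive RF - 1 simultaneous failures
    usable_tb: float         # rough figure: raw / RF, ignoring CVM and other overheads


def plan_rf(data_rf: int, nodes: int, raw_tb: float) -> RFPlan:
    """Hypothetical helper: check a cluster size against the RF rules."""
    if data_rf not in RF_RULES:
        raise ValueError(f"unsupported redundancy factor: RF{data_rf}")
    rule = RF_RULES[data_rf]
    if nodes < rule["min_nodes"]:
        raise ValueError(
            f"RF{data_rf} needs at least {rule['min_nodes']} nodes, got {nodes}"
        )
    return RFPlan(
        data_rf=data_rf,
        metadata_rf=rule["metadata_rf"],
        min_nodes=rule["min_nodes"],
        tolerated_failures=data_rf - 1,
        usable_tb=raw_tb / data_rf,
    )
```

For example, plan_rf(2, nodes=4, raw_tb=40.0) reports metadata RF3 and roughly 20 TB usable, while plan_rf(3, nodes=4, raw_tb=40.0) raises because RF3 needs five nodes.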

Data Path Redundancy

- The optimized path is the local path.

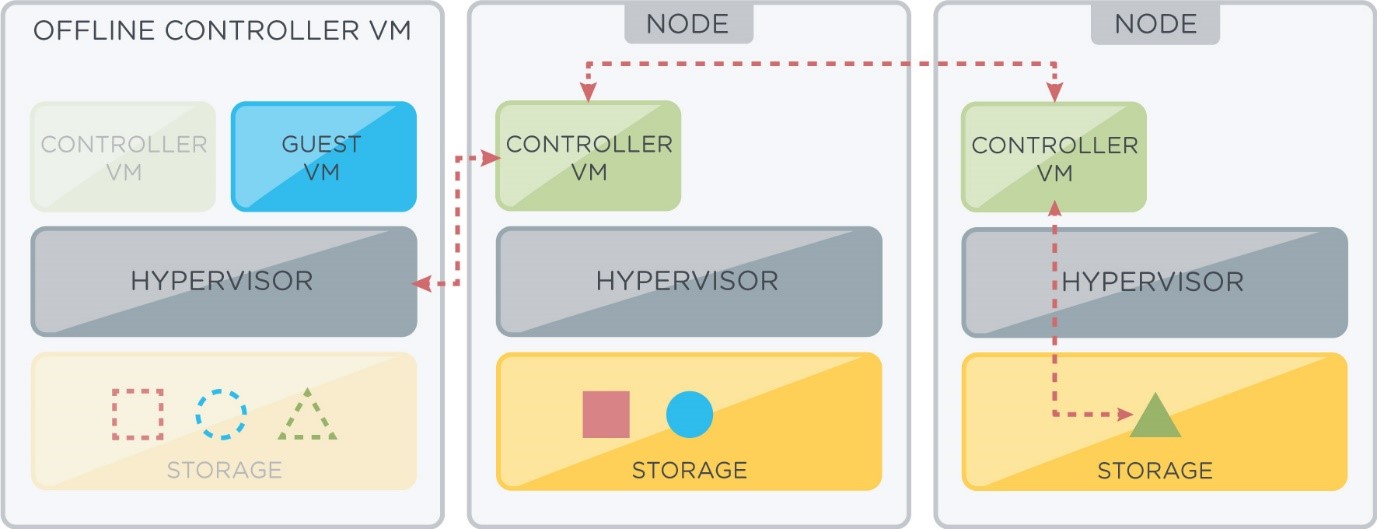

- If the local node or Controller VM fails, data IO is redirected to another Controller VM.

Failure Scenarios

- Controller VM Failure

- IO is redirected if a CVM fails two to three times within a 30-second period.

- In ESXi and Hyper-V: CVM Autopathing (ha.py) is called to redirect IO by modifying the host routing table.

- In AHV: iSCSI multipathing is used to redirect IO to a non-optimized path (other CVMs).

- VMs hang until the redirection completes.

- Two simultaneous Controller VM failures may result in data loss for some VMs.

- Host Failure

- VMs can be restarted by HA on another node, with their data rebuilt on that node's local storage.

- If the host is down for more than 30 minutes, the related CVM will be removed from the metadata ring.

- Data for HA is rebuilt by Curator and by customer-initiated reads.

- Two host failures might result in data loss for some VMs.

- Disk Failure

- Boot Drive Failure: Causes a CVM failure. The data path is redirected to another CVM.

- Metadata Drive Failure: Storage shows as missing and VMs hang until the data path is redirected to another CVM.

- Data Drive Failure: A single drive failure causes no impact, and a rebuild starts. A dual drive failure may result in data loss for some VMs.

- The CVM will try to bring a disk back up to three times if the SMART test passes.
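The timing rules in the failure scenarios (redirect after repeated CVM failures within 30 seconds, drop a CVM from the metadata ring after 30 minutes of host downtime) can be modelled as a sliding-window counter and a simple threshold. This is a hypothetical sketch, not how ha.py is implemented: the class and function names are invented, and the default threshold of 3 is an assumption picked from the note's "two to three times".

```python
from collections import deque

RING_REMOVAL_S = 30 * 60  # from the note: host down for more than 30 minutes


class AutopathDetector:
    """Hypothetical sketch: flag IO redirection once `threshold` CVM
    failures are seen within a sliding window of `window_s` seconds."""

    def __init__(self, threshold: int = 3, window_s: float = 30.0) -> None:
        self.threshold = threshold
        self.window_s = window_s
        self._failures: deque = deque()  # timestamps of recent failures
        self.redirected = False

    def record_failure(self, t: float) -> bool:
        self._failures.append(t)
        # Drop failures that have fallen out of the sliding window.
        while self._failures and t - self._failures[0] > self.window_s:
            self._failures.popleft()
        if len(self._failures) >= self.threshold:
            self.redirected = True  # once redirected, stay redirected
        return self.redirected


def should_remove_from_ring(down_seconds: float) -> bool:
    """Hypothetical check: remove the host's CVM from the metadata ring."""
    return down_seconds > RING_REMOVAL_S
```

Three failures at 0, 10 and 25 seconds trigger redirection, whereas failures at 0, 20 and 45 seconds do not, because the first one has aged out of the 30-second window.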

Single Node Cluster

- SSD failure: A single SSD failure puts the cluster into read-only mode.

- HDD failure: Same behavior as a normal (multi-node) cluster.

Availability Domains provide block and rack awareness to enhance data availability.
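Block awareness combines naturally with the RF peer selection described earlier: replicas should land on different blocks, and among the candidates a node with more free capacity is preferred. The sketch below illustrates that idea only. The Node type, the function name and the "most free capacity first" ranking are hypothetical stand-ins for Nutanix's real selection factors, and the sketch simply raises when there are too few blocks rather than modelling any fallback a real cluster may use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    name: str
    block: str      # chassis/block the node sits in
    free_tb: float  # hypothetical stand-in for "capacity" as a selection factor


def choose_rf_peers(local: Node, nodes: list[Node], rf: int) -> list[Node]:
    """Hypothetical: keep the local copy, then add RF - 1 peers on distinct
    blocks, preferring nodes with the most free capacity."""
    replicas = [local]
    used_blocks = {local.block}
    candidates = sorted(
        (n for n in nodes if n != local), key=lambda n: n.free_tb, reverse=True
    )
    for n in candidates:
        if len(replicas) == rf:
            break
        if n.block not in used_blocks:  # never two copies in one block
            replicas.append(n)
            used_blocks.add(n.block)
    if len(replicas) < rf:
        raise ValueError("not enough distinct blocks for block-aware placement")
    return replicas
```

With nodes A1 and A2 in block A, B1 in block B and C1 in block C, an RF2 write from A1 skips A2 (same block) even though it has the most free space and lands on C1.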

The data rebuild process starts immediately once a failure is detected.

The Data Resiliency Status window in Prism provides details of the cluster's resiliency status.

License

- Supported licenses

- Starter License – shipped with each node by default and does not expire. No MapReduce.

- Pro and Ultimate Licenses – must be generated, downloaded and installed.

- Prism Central/Pro License – licensed per node.

- Add-on License – for individual features, licensed per node.

- Contact Nutanix Support to downgrade a license or destroy a cluster.

- Reclaim your license before destroying a cluster.

- Mixing license types in a cluster results in the lowest license type being enabled.

- A node license is required when adding a node (upload the cluster summary file and generate the license).

- Reclaim licenses when:

- Destroying a cluster

- Removing a node from a cluster

- Modifying or downgrading licenses

- License management options include: upgrade/downgrade/rebalance/renew/add/remove.
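The "lowest license wins" rule for mixed clusters is just a minimum over an ordered set of tiers. A minimal sketch, assuming only the Starter < Pro < Ultimate ordering; the enum and function names are hypothetical, and add-on and Prism Central licenses are left out.

```python
from enum import IntEnum


class License(IntEnum):
    # Ordered tiers: a higher value means a richer feature set.
    STARTER = 1
    PRO = 2
    ULTIMATE = 3


def effective_cluster_license(node_licenses: list[License]) -> License:
    """Hypothetical: a mixed cluster runs at its lowest node license."""
    if not node_licenses:
        raise ValueError("cluster has no licensed nodes")
    return min(node_licenses)
```

A cluster with two Ultimate nodes and one Pro node therefore behaves as a Pro cluster.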

Nutanix Featured Services

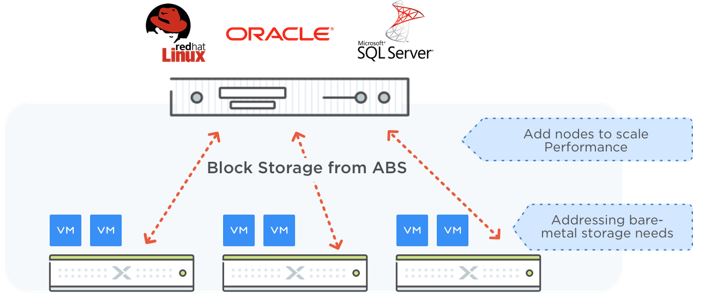

Acropolis Block Service

- Requires the “External Data Services IP Address” to be set in the Nutanix cluster settings (used for load balancing).

- Supports the iSCSI protocol.

- Uses Volume Groups to map vDisks to host initiators.

- MPIO is supported, but ABS is preferred.

- Volume Group clones can benefit use cases such as database testing.
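A Volume Group is essentially a set of vDisks plus the list of iSCSI initiators allowed to see them. The sketch below models that mapping and a clone for a database test copy. It is purely illustrative: the class, methods and naming scheme are hypothetical, and the choice that a clone starts with no initiators attached (so the test host must be attached explicitly) is an assumption of this sketch, not documented ABS behavior.

```python
from dataclasses import dataclass, field


@dataclass
class VolumeGroup:
    """Hypothetical model of an ABS Volume Group."""
    name: str
    vdisks: list[str] = field(default_factory=list)
    initiators: set[str] = field(default_factory=set)  # attached iSCSI IQNs

    def attach(self, iqn: str) -> None:
        # Map every vDisk in the group to this host initiator.
        self.initiators.add(iqn)

    def can_access(self, iqn: str) -> bool:
        return iqn in self.initiators

    def clone(self, new_name: str) -> "VolumeGroup":
        # Assumption for this sketch: the clone copies the vDisk layout
        # but starts with no initiators attached.
        return VolumeGroup(new_name, [f"{new_name}-{d}" for d in self.vdisks])
```

A production SQL host attached to "db-vg" keeps its access, while a clone such as "db-test" can be attached to a separate test server without touching production.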

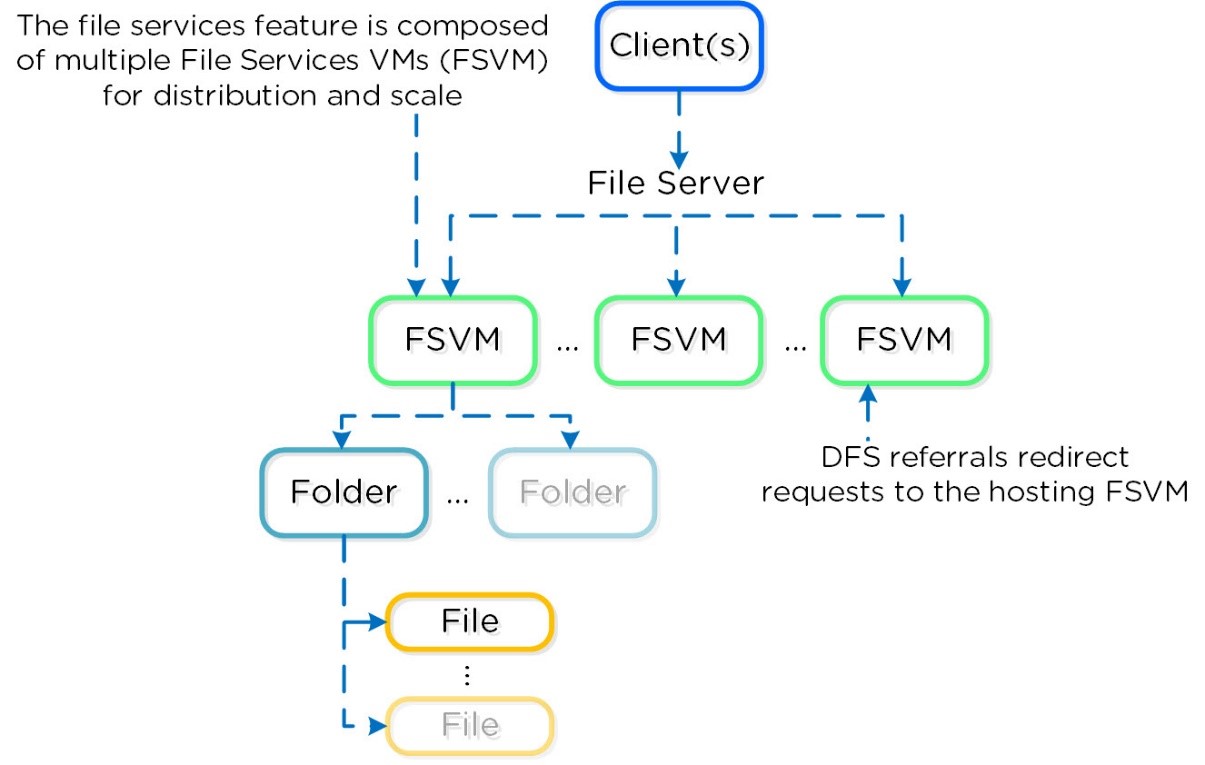

Acropolis File Service

- Scale-out architecture that provides SMB shares to Windows clients for home directories and user profiles.

- Consists of three or more file server VMs (FSVMs), with at most one FSVM per cluster node.

- A set of FSVMs composes an Acropolis File Services cluster.

- Multiple File Services clusters can be created on one Nutanix cluster.

- DFS is used to host share folders on different hosts, which helps balance performance and capacity.

- Snapshots are taken every hour, and 24 copies are retained.

- Supports share-, directory- and file-level access control lists (NTACLs).

- A new Nutanix storage container, “NutanixManagementShare”, is created for AFS.

- Uses both internal and external networks:

- Internal Network: FSVM to CVM

- External Network: Client to FSVM, and FSVM to AD/DNS.

- High Availability

- ABS handles path failures.

- A surviving FSVM assumes the resources of a failed FSVM.

- Access-Based Enumeration is supported (users need to reconnect).

- Quotas can be set up at the user or group level.

- Email notifications for quotas:

- 90%: warning email

- 100%: alert email

- For the soft-limit enforcement type, an email notification is sent every 24 hours after usage reaches 100%.

- Quota enforcement begins several minutes after policy creation.

- Nutanix performs health checks and recommends performance optimizations automatically.

- Before applying a performance optimization, flush the client DFS referral cache with the command: dfsutil cache referral flush.
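The quota notification thresholds and the hourly snapshot retention are simple enough to express directly. This is a hypothetical sketch of the rules as listed in the note (90% warning, 100% alert, repeat every 24 hours for soft limits, 24 hourly copies kept); the function and class names are invented, and the quota function does not try to de-duplicate repeated warning emails.

```python
from collections import deque
from typing import Optional

WARNING_PCT = 90          # from the note: warning email at 90%
ALERT_PCT = 100           # from the note: alert email at 100%
SOFT_LIMIT_REPEAT_H = 24  # soft limit: repeat the alert every 24 hours


def quota_email(used_gb: float, quota_gb: float,
                hours_since_last_alert: Optional[float]) -> Optional[str]:
    """Hypothetical: decide which quota email (if any) to send now."""
    pct = 100.0 * used_gb / quota_gb
    if pct >= ALERT_PCT:
        # First alert, or a repeat once 24 hours have passed (soft limit).
        if hours_since_last_alert is None or hours_since_last_alert >= SOFT_LIMIT_REPEAT_H:
            return "alert"
        return None
    if pct >= WARNING_PCT:
        return "warning"
    return None


class HourlySnapshots:
    """Hypothetical: keep only the newest `keep` hourly snapshots."""

    def __init__(self, keep: int = 24) -> None:
        self._snaps = deque(maxlen=keep)  # the oldest copy drops automatically

    def take(self, hour: int) -> None:
        self._snaps.append(f"snap-{hour:02d}")

    def names(self) -> list[str]:
        return list(self._snaps)
```

After 30 hourly snapshots, only the 24 most recent (snap-06 to snap-29) remain.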

Acropolis Container Service

- Provisions multiple VMs (normally three, or two on a three-node cluster) as container hosts on which Docker containers run.

- Docker containers do not store data persistently. Data persistence is achieved with the Nutanix Volume Plugin, which uses Acropolis Block Services to attach a volume to the container VM.

- A software upgrade is required to enable the Docker service.

Network Configuration

Each node requires at least three IP addresses:

- IPMI interface

- Hypervisor host

- Nutanix Controller VM
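The three-addresses-per-node rule makes IP planning simple arithmetic: a cluster of N nodes needs at least 3N addresses. The sketch below allocates them sequentially from one subnet using Python's standard ipaddress module. The function name, the example subnet and the sequential layout are hypothetical; real deployments often place IPMI on a separate management subnet.

```python
import ipaddress


def plan_node_ips(subnet: str, nodes: int, start: int = 0) -> list[dict[str, str]]:
    """Hypothetical: give each node an IPMI, hypervisor and CVM address,
    allocated sequentially from the usable hosts of one subnet."""
    net = ipaddress.ip_network(subnet)
    pool = list(net.hosts())[start:]  # usable addresses, skipping the first `start`
    if len(pool) < 3 * nodes:
        raise ValueError(f"{subnet} cannot hold {3 * nodes} node addresses")
    return [
        {
            "ipmi": str(pool[3 * i]),
            "hypervisor": str(pool[3 * i + 1]),
            "cvm": str(pool[3 * i + 2]),
        }
        for i in range(nodes)
    ]
```

For instance, plan_node_ips("10.0.0.0/24", 3, start=10) hands out 10.0.0.11 to 10.0.0.19: nine addresses for a three-node cluster.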

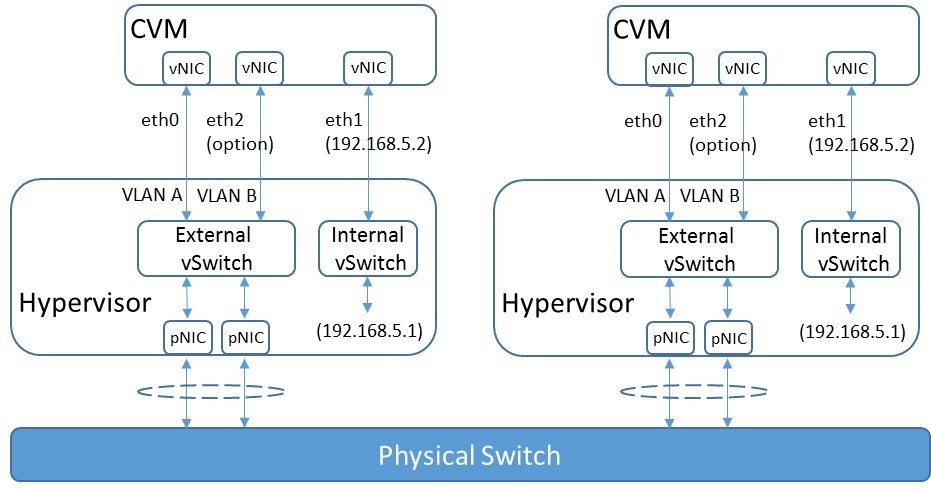

Two vSwitches are created by default (using ESXi as the example):

- vSwitch0 (Hyper-V: ExternalSwitch; AHV: br0) – two uplinks

- Management Network (HA, vMotion, vCenter communication, SSH and Prism)

- ESXi host vmk0

- Controller VM eth0

- CVM Backplane Network (optional, for a segmented network topology; eth0 takes over this role if eth2 is not defined)

- Controller VM eth2

- VM Network

- vSwitchNutanix (Hyper-V: InternalSwitch; AHV: virbr0) – no uplinks; used for internal communication between the CVM and the ESXi host.

- vmk-svm-iscsi-pg

- ESXi host vmk1 (192.168.5.1)

- svm-iscsi-pg

- Controller VM eth1 (192.168.5.2)

- Controller VM eth1:1 (192.168.5.254)

Each Controller VM must be able to communicate with the IPMI interfaces, the ESXi hosts and every other CVM in the same cluster.
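The connectivity requirement can be turned into a checklist of (source, target) pairs to verify before deployment. This is a hypothetical sketch: node names and the "-cvm", "-ipmi" and "-host" labels are invented. It reads the rule literally, so each CVM must reach every IPMI interface and every host (including its own) plus every other CVM, which gives 3N² - N pairs for N nodes.

```python
from itertools import product


def required_cvm_paths(nodes: list[str]) -> set[tuple[str, str]]:
    """Hypothetical: every (source CVM, target) pair that must be reachable."""
    paths = set()
    for src, dst in product(nodes, nodes):
        cvm = f"{src}-cvm"
        paths.add((cvm, f"{dst}-ipmi"))  # all IPMI interfaces
        paths.add((cvm, f"{dst}-host"))  # all hypervisor hosts
        if src != dst:
            paths.add((cvm, f"{dst}-cvm"))  # every other CVM
    return paths


def missing_paths(nodes: list[str],
                  reachable: set[tuple[str, str]]) -> set[tuple[str, str]]:
    """Pairs still failing, given the pairs a connectivity test confirmed."""
    return required_cvm_paths(nodes) - reachable
```

A three-node cluster yields 24 required pairs; feeding the results of a ping or port test into missing_paths lists exactly which ones still fail.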

Continue to read …
