This post is my personal technical study note on the DELL EMC VxRail hyperconverged solution, drafted while refreshing my own knowledge. It does not attempt to cover every part of the solution, and some points reflect my own understanding of it. My intention is to outline the key solution elements for readers who want a quick overview.

The note is based on VxRail version 4.7. Some figures in this post are referenced from DELL EMC VxRail public documents.

This is the first part of the note.

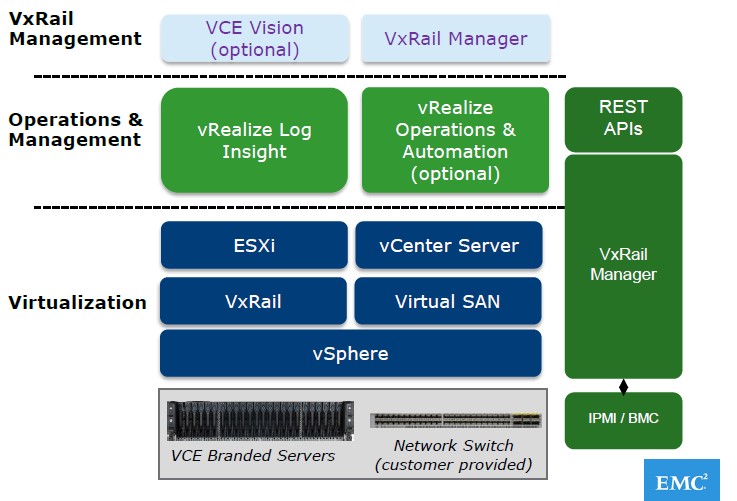

Solution Components:

The VxRail solution is composed of the following components:

- Hardware: DELL EMC PowerEdge Platform

- Software: Hypervisor (VMware)

- Software: VMware vCenter

- Software: VMware vSAN

- Software: VMware vRealize Log Insight

- Software: VxRail Manager

- Software: RecoverPoint for VMware (optional)

- Software: CloudArray (optional)

Node

Each VxRail node is composed of the following hardware parts, which vary by model:

- CPU

- Memory

- Physical disk (HDD/SSD) with Hardware RAID controller

- SATADOM Sub Module (32GB) for the ESXi OS

- Network adapter (1Gb and/or 10/25Gb)

The node types are listed below:

- Compute Dense (G, 2U4N) – Four identical nodes per appliance

- Low Profile (E, 1U1N)

- Performance Optimized (P, 2U1N)

- VDI Optimized (V, 2U1N, the only series that supports GPU cards)

- Storage Dense (S, 2U1N)

Storage Disk Drives

Hybrid Configuration

- A single SSD for caching (Cache Tier)

- Multiple HDD for capacity (Capacity Tier)

All-flash Configuration

- SSD for both Cache Tier and Capacity Tier

- A higher-endurance SSD is used for write caching

- capacity-optimized SSDs are used for capacity

Appliance Node-1

- Acts as the bootstrap node for the appliance

- Initially holds the VCSA

- Contains Log Insight Appliance

- Contains VxRail Orchestration Appliance

- Pre-configured vSAN datastore containing just Node01.

Services running in Node

- vmware-marvin service:

  - Apache Tomcat instance that runs on the VxRail Manager virtual machine to provide the VxRail management interface.

  - Control script: /etc/init.d/vmware-marvin stop | start | restart

  - Configuration file: config-static.json.

- vmware-loudmouth service:

  - Discovers and configures nodes on the network during initial configuration and appliance expansion.

  - Assists in replacing failed nodes.

  - Uses multicast.

  - Control script: /etc/init.d/loudmouth stop | start | restart

  - Runs on both the VxRail Manager VM and each ESXi server.

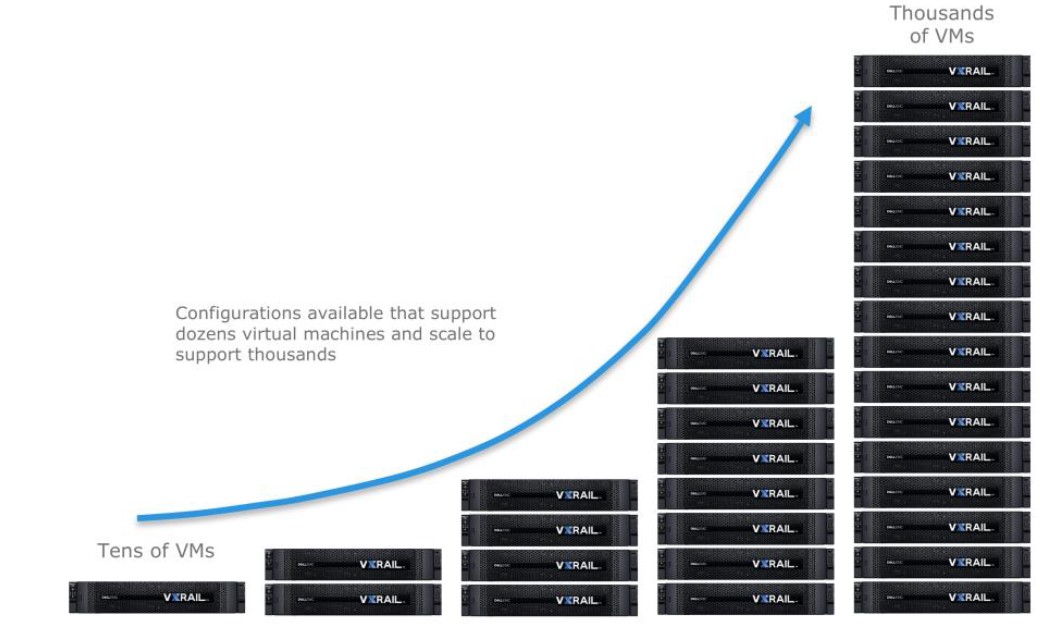

Cluster

Cluster General Rules

- A single cluster supports 3 to 64 nodes.

- Mixing node types in a single cluster is supported, but the first 4 nodes must have the same CPU, memory, and storage configuration.

- The storage configuration must be the same across a single cluster (all flash or all hybrid).

- Mixing 1GbE and 10GbE is not supported.

- The cluster can be expanded one node at a time.

- Each VxRail cluster is a unique and independent vSAN datastore within vCenter.
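To make the rules above easier to remember, here is a minimal Python sketch of how they could be checked against a planned node list. The `Node` fields and the `check_cluster` function are my own illustrative names, not part of any VxRail tool or API, and the single `storage` field is a simplification of the real CPU/memory/storage comparison.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    """Hypothetical description of one node; field names are illustrative."""
    name: str
    cpu: str
    memory_gb: int
    storage: str        # "hybrid" or "all-flash"
    nic_speed_gbe: int  # e.g. 1, 10 or 25


def check_cluster(nodes):
    """Return a list of rule violations; an empty list means none were found."""
    problems = []
    # Rule: a single cluster supports 3 to 64 nodes.
    if not 3 <= len(nodes) <= 64:
        problems.append("a cluster must have between 3 and 64 nodes")
    # Rule: the first 4 nodes need the same CPU, memory and storage.
    if len({(n.cpu, n.memory_gb, n.storage) for n in nodes[:4]}) > 1:
        problems.append("the first 4 nodes must have the same CPU/memory/storage")
    # Rule: the whole cluster is either all flash or all hybrid.
    if len({n.storage for n in nodes}) > 1:
        problems.append("hybrid and all-flash nodes cannot be mixed")
    # Rule: mixing 1GbE and 10GbE is not supported.
    speeds = {n.nic_speed_gbe for n in nodes}
    if 1 in speeds and 10 in speeds:
        problems.append("1GbE and 10GbE nodes cannot be mixed")
    return problems


if __name__ == "__main__":
    plan = [Node(f"node{i}", "Xeon-A", 256, "all-flash", 10) for i in range(4)]
    print(check_cluster(plan))      # no violations for four identical nodes
    print(check_cluster(plan[:2]))  # too few nodes
```

The function returns every violation rather than stopping at the first one, which is handy when reviewing a sizing plan.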

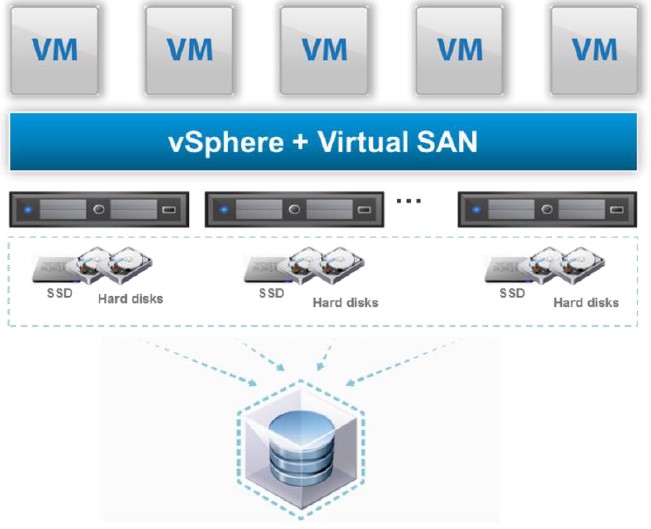

File System

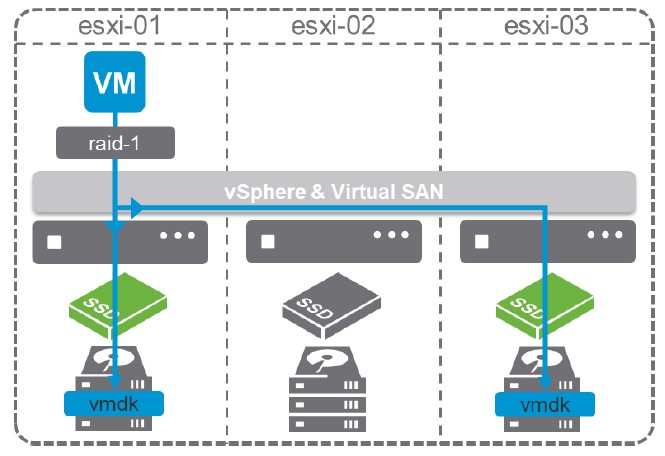

VxRail leverages VMware vSAN

- vSAN aggregates locally attached disks of hosts in a vSphere cluster to create a pool of distributed shared storage

- vSAN is embedded in the ESXi kernel layer

- Little impact on CPU utilization (less than 10 percent)

- Self-balances based on workload and resource availability

- vSAN is preconfigured when the VxRail system is first initialized

- Distributed, persistent cache on flash across the cluster

- Uses the Cluster Monitoring, Membership, and Directory Service (CMMDS), a clustered metadata database. All hosts must contain an identical copy of the metadata

- All VMs deployed with vSAN are set with a default availability policy that ensures at least one additional copy of the VM data is available.

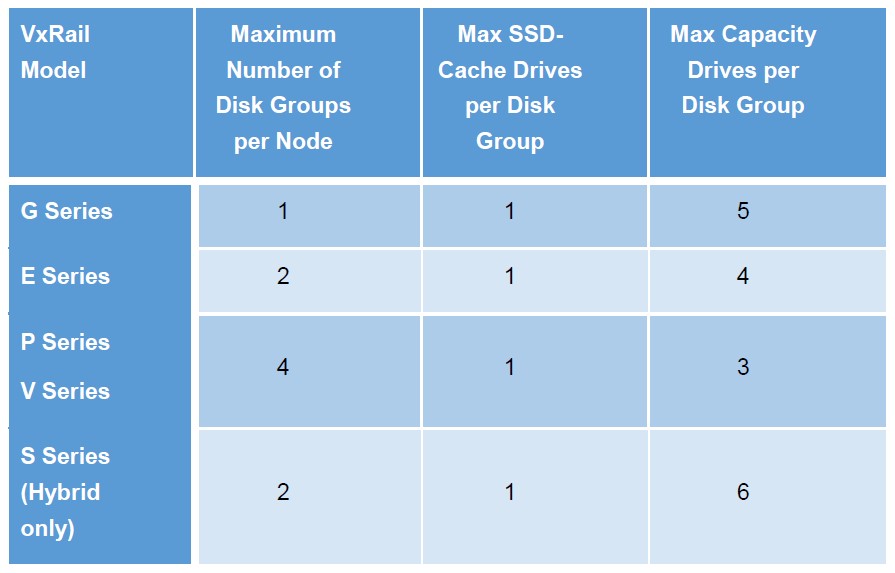

Disk Group

- Disk drives in each VxRail host are organized into disk groups

- Each disk group requires one and only one high-endurance SSD flash-cache drive.

- For VxRail, the SSD cache drive must be installed in designated slots
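The disk group rule can be written as a small check. This is only a sketch under my own assumptions: drives are modelled as `(role, media)` tuples, `check_disk_group` is a hypothetical helper, and the requirement of at least one capacity drive is my addition rather than something stated in this note.

```python
def check_disk_group(drives):
    """Return True if the drive list forms a valid disk group.

    drives: list of (role, media) tuples, e.g. ("cache", "ssd").
    """
    cache = [d for d in drives if d[0] == "cache"]
    capacity = [d for d in drives if d[0] == "capacity"]
    # Exactly one cache drive, and it must be an SSD.
    if len(cache) != 1 or cache[0][1] != "ssd":
        return False
    # Assumption: a disk group also needs at least one capacity drive.
    return len(capacity) >= 1


if __name__ == "__main__":
    hybrid = [("cache", "ssd"), ("capacity", "hdd"), ("capacity", "hdd")]
    print(check_disk_group(hybrid))
```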

Read Cache

- Organized in cache lines; the current cache line size is 1MB, filled with multiple 16KB chunks.

- vSAN maintains in-memory metadata that tracks the state of the read cache.

- Read cache contents are not tracked across power-cycle operations of the host.

- Read order: client read cache, SSD write cache, SSD read cache, disks.

- Client Cache

  - Used on both hybrid and all-flash configurations.

  - Leverages local server DRAM within the node to accelerate read performance.

  - The amount of memory allocated is 0.4% of host memory, up to 1GB per host.

- SSD Cache

  - Only exists in hybrid configurations.

  - For a given VM data block, vSAN always reads from the same replica/mirror, which does not need to be the local replica.
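The read order and the client cache sizing can be sketched in a few lines of Python. This is my own simplification, not vSAN code: each tier is modelled as a plain dictionary, the function names are hypothetical, and I treat the 1GB cap as 1 GiB.

```python
GIB = 1024 ** 3


def client_cache_bytes(host_memory_bytes):
    """Client cache size: 0.4% of host memory, capped at 1 GiB (assumed unit)."""
    return min(host_memory_bytes * 4 // 1000, GIB)


def read_block(block_id, tiers):
    """Look a block up tier by tier and return (tier_name, data).

    tiers: list of (name, dict) pairs, ordered from fastest to slowest.
    """
    for name, store in tiers:
        if block_id in store:
            return name, store[block_id]
    raise KeyError(block_id)


if __name__ == "__main__":
    tiers = [
        ("client read cache", {}),
        ("SSD write cache", {7: b"new"}),
        ("SSD read cache", {7: b"old", 8: b"x"}),
        ("disks", {7: b"old", 8: b"x", 9: b"y"}),
    ]
    print(read_block(7, tiers))  # served from the write cache, which holds the newest data
    print(read_block(9, tiers))  # falls through to the capacity disks
    print(client_cache_bytes(128 * GIB))
```

Checking the write cache before the read cache matters because a recently written block may not have been destaged yet, so the write cache holds the newest copy.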

Write Cache

- Write cache is 30% of device capacity.

- Write cache is a circular buffer.

- SSD Cache

  - Non-volatile write buffer.

  - Data is written to two different SSD caches.
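To illustrate what "circular buffer" means here, below is a toy Python sketch. It is not how vSAN actually implements destaging; the class name, the slot-based sizing, and the `destaged` list standing in for the capacity tier are all assumptions made for the example.

```python
def write_buffer_bytes(device_capacity_bytes):
    """Write cache size as 30% of the cache device capacity."""
    return device_capacity_bytes * 30 // 100


class CircularWriteBuffer:
    """Fixed-size ring of write entries; the oldest entry is destaged when full."""

    def __init__(self, slots):
        if slots < 1:
            raise ValueError("slots must be at least 1")
        self._buf = [None] * slots
        self._head = 0       # index of the oldest entry
        self._count = 0      # number of entries currently buffered
        self.destaged = []   # stands in for the capacity tier

    def write(self, block_id, data):
        # When the ring is full, move the oldest entry to the capacity tier first.
        if self._count == len(self._buf):
            self.destaged.append(self._buf[self._head])
            self._head = (self._head + 1) % len(self._buf)
            self._count -= 1
        tail = (self._head + self._count) % len(self._buf)
        self._buf[tail] = (block_id, data)
        self._count += 1

    def pending(self):
        """Entries still in the buffer, oldest first."""
        size = len(self._buf)
        return [self._buf[(self._head + i) % size] for i in range(self._count)]


if __name__ == "__main__":
    wb = CircularWriteBuffer(slots=3)
    for i, name in enumerate("abcde"):
        wb.write(i, name)
    print(wb.destaged)
    print(wb.pending())
```

The head index wraps around the fixed list, so no memory is allocated per write; only the oldest entries leave the buffer.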

Data Placement

- Managed by Storage Policy (SPBM, Storage Policy Based Management)

- Administrators can dynamically change a VM storage policy

- Storage Policy Attributes

  - Number of disk stripes per object (1 to 12, default 1; stripe size is 1MB)

  - Flash read cache reservation (default 0, automated)

  - Failures to tolerate (default 1, maximum 3; with erasure coding enabled, FTT=1 uses RAID-5 and FTT=2 uses RAID-6)

  - Failure tolerance method (performance, or capacity with erasure coding)

  - IOPS limit for object (QoS; default is no limit)

  - Disable object checksum (checksum is enabled by default: a 5-byte checksum per 4K chunk)

  - Force provisioning (default is No)

  - Object space reservation (default is 0%)

- Sparse swap can help reduce the space that VM swap objects consume in vSAN (it disables thick provisioning of vSAN swap).
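The policy attributes translate directly into raw capacity requirements, which I find easier to see with a small Python sketch. The `StoragePolicy` class and `raw_capacity_gb` function are hypothetical names of mine, not an SPBM API. The 3+1 layout for RAID-5 and 4+2 layout for RAID-6 are not stated in this note; they are my understanding of vSAN erasure coding, and allowing a value of 0 for failures to tolerate is also my assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StoragePolicy:
    """Illustrative subset of the policy attributes, with the defaults listed above."""
    failures_to_tolerate: int = 1
    erasure_coding: bool = False   # False = performance (mirroring), True = capacity
    stripes_per_object: int = 1
    object_space_reservation_pct: int = 0
    force_provisioning: bool = False

    def __post_init__(self):
        if not 0 <= self.failures_to_tolerate <= 3:
            raise ValueError("failures to tolerate must be between 0 and 3")
        if not 1 <= self.stripes_per_object <= 12:
            raise ValueError("stripes per object must be between 1 and 12")
        if self.erasure_coding and self.failures_to_tolerate not in (1, 2):
            raise ValueError("erasure coding applies only to FTT=1 or FTT=2")


def raw_capacity_gb(policy, usable_gb):
    """Raw capacity consumed to store usable_gb of VM data under the policy."""
    ftt = policy.failures_to_tolerate
    if policy.erasure_coding:
        # Assumed layouts: RAID-5 is 3 data + 1 parity, RAID-6 is 4 data + 2 parity.
        data, parity = (3, 1) if ftt == 1 else (4, 2)
        return usable_gb * (data + parity) / data
    # Mirroring keeps FTT + 1 full copies of the data.
    return usable_gb * (ftt + 1)


if __name__ == "__main__":
    print(raw_capacity_gb(StoragePolicy(), 100))
    print(raw_capacity_gb(StoragePolicy(erasure_coding=True), 100))
    print(raw_capacity_gb(StoragePolicy(2, erasure_coding=True), 100))
```

With the default policy, 100GB of VM data consumes 200GB of raw capacity, which matches the "at least one additional copy" behaviour of the default availability policy.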

Continue to read……
